1.3: The Evolution and Role of Information Systems


Now that we have explored the different components of information systems (IS), we need to focus on IS's role in an organization and how computer information systems have evolved throughout the years to deliver value to organizations continuously.

From the first part of the definition of an information system, we see that these components collect, store, organize, and distribute data throughout the organization. We can now ask what these components do for an organization, which addresses the second part of the definition of an IS: "What is the role of IS in providing value to businesses and individuals as their needs evolve?"

Earlier, we discussed how IS collects raw data and organizes it to create new information that aids in running a business. IS must transform data into organizational knowledge to help management make informed decisions. IS plays a crucial role in converting data into useful information that can be used to gain a competitive advantage in today's data-driven business world. Over the years, IS has evolved from a tool for running an organization efficiently into a strategic tool for gaining a competitive edge. To fully understand the importance of IS, it is essential to see how it has changed over time to create new opportunities for businesses and meet evolving human needs.

Today's smartphones are the result of earlier technological advances that made computers smarter, smaller, and lighter. Even though research and experiments took place before 1936, it was not until 1936 that there was concrete proof that Boolean logic could be implemented in a machine to add two binary digits together. Computers would not be possible without the ability to calculate in a machine. Since then, we have gone from the first commercial computer, the UNIVAC I, which weighed 29,000 pounds and cost about $1M each, to today's devices, such as Apple's iPhone 14, which weighs about 200 g, costs about $1,500, and has significantly more computing power than the UNIVAC I. It has taken only 71 years since the UNIVAC I was delivered! (Figure 1.3.1)

Figure 1.3.1 shows advances from the 1930s through the 2020s. In the 1930s, the Model K Adder (1936) demonstrated Boolean logic by adding two binary digits. In the 1950s, the first commercial computer, the UNIVAC I (1951), weighed 29,000 pounds and cost about $1M. In the 1970s, the Xerox Alto (1973), the first system to have all of the contemporary graphical user interface components, cost $32,000 each. In the 2010s, the Fitbit Ionic (2017) weighed about 30 g and cost about $300. By the 2020s, the Microsoft Surface Pro 9 (2022) weighed 1.94 lb (879 g) and cost up to about $2,700, and the Apple iPhone 14 (2022) weighed 172 to 240 g and cost about $1,000 to $1,500.

As you go through each chapter of the book, keep in mind how each component of an IS has evolved to accommodate the significant decrease in the size of computing devices, the increase in the computing power of the hardware, the growing complexity of the software that developers write to make these devices powerful yet still intuitive to use, and the need to collect and process enormous amounts of data.

The Early Years (the 1930s-1950s)

We may say that computer history came to public view in the 1930s, when George Stibitz developed the "Model K" Adder (Figure 1.3.1) on his kitchen table using telephone company relays and proved the viability of Boolean logic, a fundamental concept in the design of computers. From 1939 onward, special-purpose equipment evolved into general-purpose computers built by companies that are now iconic in the computing industry, such as Hewlett-Packard, whose first product was the HP 200A Audio Oscillator, a version of which Disney used for Fantasia. The 1940s gave us the first stored program to run on a computer, through the work of John von Neumann, Frederic Williams, Tom Kilburn, and Geoff Toothill. The 1950s gave us the first commercial computer, the UNIVAC I, made by Remington Rand and delivered to the US Census Bureau; it weighed 29,000 pounds and cost more than $1,000,000 each (Computer History Museum, n.d.).
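The Model K's key demonstration was that Boolean operations carried out by switches (relays) can add binary digits. As an illustrative sketch in Python (the function names are hypothetical, and this models only the logic, not Stibitz's actual relay circuit), a one-bit half adder produces the sum bit with XOR and the carry bit with AND; chaining two half adders into a full adder lets the same logic add binary numbers of any length:

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit) using only XOR and AND."""
    return a ^ b, a & b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry by chaining two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2  # a carry out occurs if either half adder carried


def add_binary(x: str, y: str) -> str:
    """Add two binary strings (e.g. '101' + '11') one bit at a time."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    bits = []
    for a, b in zip(reversed(x), reversed(y)):  # least significant bit first
        s, carry = full_adder(int(a), int(b), carry)
        bits.append(str(s))
    if carry:
        bits.append("1")
    return "".join(reversed(bits))


# Truth table of the one-bit adder
for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))

print(add_binary("101", "11"))  # 5 + 3 = 8, printed as 1000
```

Running it prints the four-row truth table of the half adder and then `1000`, the binary form of 5 + 3 = 8.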

Software evolved along with hardware. Rear Admiral Grace Hopper invented a compiler program that allowed programmers to write instructions for the UNIVAC I using English-like words (Figure 1.3.2). Hopper's invention enabled the creation of what we now know as programming languages. With the arrival of general-purpose commercial computers, we entered what is now called the mainframe era (Computer History Museum, n.d.).

Figure 1.3.2: Rear Admiral Grace M. Hopper.

Image by James S. Davis is licensed under CC-PD

The story goes like this: when Rear Admiral Hopper worked on the Mark II, an error was traced to a moth trapped in a relay, popularizing the term "bug." Figure 1.3.3 shows the bug, removed and taped to a logbook. Her biography describes more of her life and contributions to information systems.

The Mainframe Era (the 1950s-1960s)

From the late 1950s through the 1960s, computers were seen as a way to do calculations more efficiently. These first business computers were room-sized monsters, with several refrigerator-sized machines linked together. Their primary work was to organize and store large volumes of information that were tedious to manage by hand. Companies such as Digital Equipment Corporation (DEC), RCA, and IBM expanded the computer hardware and software industry. Only large businesses, universities, and government agencies could afford these machines, which required specialized personnel and facilities to install and operate.

In 1964, IBM introduced the System/360 with five models. It was hailed as a significant milestone in computing history because it targeted business customers in addition to the existing scientific customers. Equally important, all models could run the same software (Computer History Museum, n.d.). These models could serve hundreds of users simultaneously through time-sharing. Typical functions included scientific calculations and accounting under the broader umbrella of "data processing."
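Time-sharing let one machine appear to serve many users at once by giving each user's job a short slice of processor time in turn. A minimal sketch, assuming a simple round-robin policy with a fixed time slice (real mainframe schedulers such as the one behind TSO were far more elaborate, and the user names and job lengths here are hypothetical):

```python
from collections import deque


def round_robin(jobs: dict[str, int], time_slice: int) -> list[tuple[str, int]]:
    """Simulate round-robin time-sharing.

    jobs maps a (hypothetical) user name to the units of CPU time that user's
    job needs. Returns (user, finish_time) pairs in the order the jobs complete.
    """
    queue = deque(jobs.items())
    clock = 0
    finished = []
    while queue:
        user, remaining = queue.popleft()
        run = min(time_slice, remaining)  # run for one slice, or less if nearly done
        clock += run
        remaining -= run
        if remaining > 0:
            queue.append((user, remaining))  # back of the line for another turn
        else:
            finished.append((user, clock))
    return finished


print(round_robin({"alice": 5, "bob": 2, "carol": 4}, time_slice=2))
# [('bob', 4), ('carol', 10), ('alice', 11)]
```

Short jobs finish early instead of waiting behind long ones, which is what made a shared machine feel responsive to each of its many users.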

In the late 1960s, Material Requirements Planning (MRP) systems were introduced. This software, running on a mainframe computer, allowed companies to manage the manufacturing process more efficiently. From tracking inventory to creating bills of materials to scheduling production, MRP systems (and later Manufacturing Resource Planning, or MRP II, systems) gave more businesses a reason to integrate computing into their processes. IBM became the dominant mainframe company. Nicknamed "Big Blue," it became synonymous with business computing. Continued software improvement and the availability of cheaper hardware eventually brought mainframe computers (and their little sibling, the minicomputer) into most large businesses.
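At the heart of MRP is "exploding" a bill of materials: working down from a production order through each level of components, netting each requirement against inventory on hand, and planning orders only for the shortfall. A minimal sketch, assuming a hypothetical bicycle bill of materials and stock levels (real MRP systems also handle lead times, lot sizes, and components shared across levels, all omitted here):

```python
from collections import defaultdict

# Hypothetical bill of materials: parent item -> {component: quantity per parent}
BOM = {
    "bicycle": {"frame": 1, "wheel": 2, "seat": 1},
    "wheel": {"rim": 1, "spoke": 32, "tire": 1},
}


def plan(item, qty, bom, on_hand, orders):
    """Net the requirement for `item` against stock, then explode only the shortfall."""
    available = on_hand.get(item, 0)
    used = min(available, qty)
    on_hand[item] = available - used  # consume inventory
    shortfall = qty - used
    if shortfall == 0:
        return
    orders[item] += shortfall  # planned order to make or buy
    for component, per_parent in bom.get(item, {}).items():
        plan(component, shortfall * per_parent, bom, on_hand, orders)


def mrp(item, qty, bom, on_hand):
    orders = defaultdict(int)
    plan(item, qty, bom, dict(on_hand), orders)  # copy so the caller's stock is untouched
    return dict(orders)


print(mrp("bicycle", 10, BOM, {"wheel": 5, "spoke": 100}))
# {'bicycle': 10, 'frame': 10, 'wheel': 15, 'rim': 15, 'spoke': 380, 'tire': 15, 'seat': 10}
```

Because the 5 wheels in stock reduce the wheels to be built to 15, the spoke requirement drops to 15 × 32 = 480, and the 100 spokes on hand cut the order to 380. Netting level by level like this is what kept MRP from over-ordering parts.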

The PC Revolution (the 1970s-1980s)

The 1970s ushered in an era of growth by making computers both smaller (microcomputers) and faster at the high end (supercomputers). In 1975, the first microcomputer, the Altair 8800, was announced on the cover of Popular Electronics. Its inventor, Ed Roberts, coined the term "personal computer." The Altair sold for $297 to $395, came with 256 bytes of memory, and licensed Bill Gates and Paul Allen's BASIC programming language. Its immediate popularity sparked entrepreneurs' imaginations everywhere, and dozens of companies quickly began making these "personal computers." Though at first just a niche product for computer hobbyists, personal computers sold increasingly well as usability improved and practical software became available. The most prominent of these early personal computer makers was a little company known as Apple Computer, headed by Steve Jobs and Steve Wozniak, with the hugely successful "Apple II" (Computer History Museum, n.d.).

Hardware companies such as Intel and Motorola continued introducing faster microprocessors (i.e., computer chips). Not wanting to be left out of the revolution, in 1981 IBM (teaming with a little company called Microsoft for its operating system software) released its own version of the personal computer, called the "PC." Businesses that had used IBM mainframes for years finally had the permission they needed to bring personal computers into their companies, and the IBM PC took off. In 1982, Time magazine named the personal computer its "Machine of the Year" in place of its usual "Man of the Year."

Because of the IBM PC's open architecture, it was easy for other companies to copy or "clone" it. During the 1980s, many new computers sprang up, offering less expensive versions of the PC. This drove prices down and spurred innovation. Microsoft developed its Windows operating system, making the PC easier to use. During this period, common uses for the PC included word processing, spreadsheets, and databases. These early PCs were not connected to any network; for the most part, they stood alone as islands of innovation within the larger organization. PCs became increasingly affordable as new companies such as Dell entered the market.

Today, PCs continue to shrink into a new range of hardware devices such as laptops, the Apple iPhone, Amazon Kindle, Google Nest, and the Apple Watch. Not only did computers become smaller, but they also became faster and more powerful; big computers, in turn, evolved into supercomputers, with IBM and Cray among the leading vendors.

Networks, Internet, and World Wide Web (The 1980s - Present)

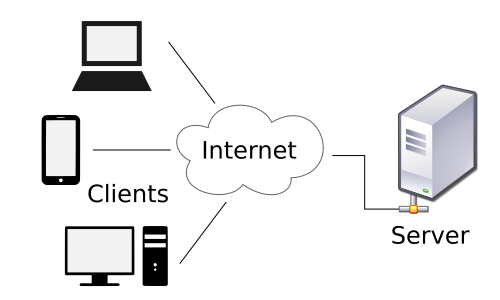

Client-Server

By the mid-1980s, businesses began to see the need to connect their computers to collaborate and share resources. This networking architecture was referred to as "client-server" because users would log in to the local area network (LAN) from their PC (the "client") by connecting to a powerful computer called a "server," which would then grant them rights to different resources on the network (such as shared file areas and a printer). Software companies began developing applications that allowed multiple users to access the same data simultaneously. This evolved into software applications for communicating, with the first prevalent use of electronic mail appearing at this time.
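The client-server idea described above, where a client logs in and a server decides which shared resources it may use, can be sketched in a few lines. This is an in-process illustration only, assuming hypothetical user and resource names; it involves no real network, and actual LAN servers of the era (and today) implemented authentication and permissions very differently:

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Toy file/print server: tracks which users may use which shared resources."""
    permissions: dict[str, set[str]] = field(default_factory=dict)
    sessions: set[str] = field(default_factory=set)

    def login(self, user: str) -> bool:
        """A client logs in; only known users get a session."""
        if user in self.permissions:
            self.sessions.add(user)
            return True
        return False

    def request(self, user: str, resource: str) -> str:
        """A client asks for a resource; the server grants or denies it."""
        if user not in self.sessions:
            return "denied: not logged in"
        if resource not in self.permissions[user]:
            return f"denied: no rights to {resource}"
        return f"granted: {resource}"


server = Server(permissions={"pat": {"shared-files", "printer"}, "lee": {"printer"}})
server.login("pat")
server.login("lee")
print(server.request("pat", "shared-files"))  # granted: shared-files
print(server.request("lee", "shared-files"))  # denied: no rights to shared-files
```

Keeping the permission rules on one central server, rather than on each PC, is what let an administrator manage access to shared files and printers in one place.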

This networking and data sharing stayed, for the most part, within the confines of each business. Electronic data was sometimes exchanged between companies, but this was a very specialized function. Computers were now seen as tools for collaborating within an organization. These networks of computers were becoming so powerful that they replaced many of the functions previously performed by larger mainframe computers, at a fraction of the cost.

During this era, the first Enterprise Resource Planning (ERP) systems were developed and run on the client-server architecture. An ERP system is a software application with a centralized database that can be used to run a company's entire business. With separate modules for accounting, finance, inventory, human resources, and many more, ERP systems, with Germany's SAP leading the way, represent the state of the art in information systems integration. We will discuss ERP systems as part of the chapter on Processes (Chapter 9).
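The defining feature of an ERP system is that its modules share one centralized database, so a transaction recorded by one module is immediately visible to the others. A minimal sketch using Python's built-in `sqlite3` module; the table layout and module functions are hypothetical and stand in for what a real ERP product such as SAP does at vastly larger scale:

```python
import sqlite3

# One shared database for every module (in memory for this sketch)
db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER NOT NULL);
    CREATE TABLE ledger (entry_id INTEGER PRIMARY KEY, account TEXT, amount REAL);
""")
db.execute("INSERT INTO inventory VALUES ('WIDGET', 100)")


def sales_module_record_sale(sku: str, qty: int, unit_price: float) -> None:
    """Hypothetical sales module: one transaction updates stock AND the books."""
    with db:  # commits both statements together, or rolls both back on error
        db.execute("UPDATE inventory SET qty = qty - ? WHERE sku = ?", (qty, sku))
        db.execute("INSERT INTO ledger (account, amount) VALUES ('revenue', ?)",
                   (qty * unit_price,))


def inventory_module_stock(sku: str) -> int:
    """Hypothetical inventory module reading the same shared table."""
    return db.execute("SELECT qty FROM inventory WHERE sku = ?", (sku,)).fetchone()[0]


def accounting_module_revenue() -> float:
    """Hypothetical accounting module reading the same shared ledger."""
    return db.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM ledger WHERE account = 'revenue'"
    ).fetchone()[0]


sales_module_record_sale("WIDGET", 3, 25.0)
print(inventory_module_stock("WIDGET"))  # 97
print(accounting_module_revenue())       # 75.0
```

Without a shared database, the sales, inventory, and accounting departments would each keep their own copy of these figures and would have to reconcile them by hand.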

Networking and communication technologies, along with software, evolved through all of these periods: the modem in the 1940s, the clickable link in the 1950s, email (the "killer app") with its now-iconic "@" sign and mobile networks in the 1970s, and the early rise of online communities through companies such as AOL in the early 1980s. First created in 1969 as part of a project funded by the US government's Advanced Research Projects Agency (ARPA), the Internet was confined for many years to use by universities, government agencies, and researchers. Because it was complicated to use, the Internet was unsuitable for mainstream use in business.

One exception was the ability to extend electronic mail beyond a single organization. While the first email messages on the Internet were sent in the early 1970s, companies that wanted to expand their LAN-based email started connecting to the Internet in the 1980s. Companies linked their internal networks to the Internet so that their employees could communicate with employees at other companies. With these early Internet connections, the computer began evolving from a computational device into a communications device.

Web 1.0 and the Browser

In 1989, Tim Berners-Lee of the CERN laboratory developed an application, a browser, that gave existing technologies such as clickable links a simpler, more intuitive graphical user interface, making vast amounts of information easy for the general public, not just researchers, to share and locate (CERN, n.d.). We call this the World Wide Web (or Web 1.0). This invention became the launching point for the growth of the Internet as a way for businesses to share information about themselves and for consumers to find them easily.

As web browsers and Internet connections became the norm, companies worldwide rushed to grab domain names and create websites. Even individuals would create personal websites to post pictures to share with friends and family. Users could create content on their own and join the global economy for the first time, a significant event that changed the role of IS for both businesses and consumers.

In 1991, the National Science Foundation, which governed how the Internet was used, lifted restrictions to allow its commercialization. These policy changes ushered in new companies that established new e-commerce industries, such as eBay and Amazon.com. The fast expansion of the digital marketplace led to the dot-com boom through the late 1990s and then the dot-com bust in 2000. An essential outcome of the Internet boom period was that network and Internet connections were implemented worldwide, ushering in the era of globalization, which we will discuss in Chapter 11.

The digital world became more dangerous as more companies and users connected globally. Computer viruses and worms, once slowly propagated through the sharing of computer disks, could now spread with tremendous speed via the Internet and the proliferation of new hardware devices for personal or home use. Operating and application software had to evolve to defend against this threat. A whole new computer and Internet security industry arose as the threats increased and became more sophisticated. We will study information security in Chapter 6.

In 1969, the University of California, Los Angeles; the Stanford Research Institute; the University of California, Santa Barbara; and the University of Utah were connected on the ARPANET, the technical infrastructure for the Internet (Computer History Museum, n.d.).

Web 2.0 and e-Commerce

Perhaps you noticed that in the Web 1.0 period, users and companies could create content but could not interact directly on a website. Despite the dot-com bust, technologies continued to evolve in response to customers' growing need to personalize their experience and engage directly with businesses.

Websites became interactive. Instead of just visiting a site to find out about a business and purchase its products, customers could now interact with companies directly and, most profoundly, with each other: sharing their experiences without undue influence from companies, or even buying things directly from each other. This new type of interactive website, where users did not have to know how to create a web page or do any programming to put information online, became known as Web 2.0.

Web 2.0 is exemplified by blogging, social networking, bartering, purchasing, and posting interactive comments on many websites. This new Web 2.0 world, where online interaction became expected, significantly impacted many businesses and even whole industries. Some industries, such as bookstores, found themselves relegated to niche status. Others, such as video rental chains and travel agencies, began going out of business as online technologies replaced them. This process of technology replacing an intermediary in a transaction is called disintermediation. Amazon is one of the most successful examples: it has disintermediated intermediaries in many industries and is one of the leading e-commerce websites.

When Jeff Bezos, the founder of Amazon.com, learned that Internet use was growing by 2,300% a year, he drew up a short list of five products that could be marketed online: compact discs, computer hardware, computer software, videos, and books. He chose to sell books online because he believed there was strong worldwide demand for books, the price of books was low, and there was a large supply of titles to choose from. He wanted to build the largest bookstore online and named the company 'Amazon,' after the Amazon River, the biggest river in the world. The company opened for business online on July 16, 1995, and has continued to grow beyond selling books into many new industries, such as web services, autonomous vehicles, gaming, and groceries (Wikipedia, 2022). Amazon went public on May 15, 1997, at an IPO price of $18.00, or $0.075 adjusted for the multiple stock splits between June 2, 1998, and June 3, 2022 (Amazon.com, 2022). As of October 31, 2022, Amazon had a market capitalization of $1.045 trillion and a share price of $102.44 (Yahoo Finance, 2022).
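The two IPO prices quoted above are related by the cumulative stock-split factor: each original share became several shares, so the per-share price is divided by the product of the split ratios. A quick check, assuming Amazon's split history of 2-for-1 (1998), 3-for-1 (1999), 2-for-1 (1999), and 20-for-1 (2022); these ratios are not given in this section and should be verified against the company's investor-relations records:

```python
from math import prod

IPO_PRICE = 18.00
# Split ratios assumed from Amazon's published split history (verify before relying on them)
SPLITS = [2, 3, 2, 20]

factor = prod(SPLITS)          # shares that one original IPO share became
adjusted = IPO_PRICE / factor  # IPO price expressed in today's share units
print(factor, round(adjusted, 3))  # 240 0.075
```

A cumulative factor of 240 is exactly what turns $18.00 into $0.075, so the two figures in the text are consistent with each other.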

The Post PC and Web 2.0 World

After thirty years of the PC serving as the primary computing device in most businesses, PC sales are beginning to decline as tablets and smartphones take off. Like the mainframe before it, the PC will continue to play a key role in business but will no longer be the primary way people interact or do business. The limited storage and processing power of these mobile devices is being offset by a move to "cloud" computing, which allows for storage, sharing, and backup of information on a massive scale.

As the world became more connected, new questions arose. Should access to the Internet be considered a right? What is legal to copy or share on the Internet? How can companies protect private data, whether they collect it or users provide it? Are there laws that must be updated or created to protect people's data, including children's data? Policymakers are still catching up with technological advances, even though many laws have been updated or created. Ethical issues surrounding information systems will be covered in Chapter 12.

Users continue to push for faster and smaller computing devices. Historically, microcomputers displaced mainframes, and laptops almost displaced desktops. We now see smartphones and tablets replacing desktops in many situations. Will hardware vendors hit physical limits as devices keep shrinking? Is this the beginning of a new era of computing paradigms such as quantum computing, a trendy topic we will cover in more detail in Chapter 13?

A vast amount of content has been generated by users in the Web 2.0 world, and businesses have been monetizing this user-generated content without sharing any of the profits with those users. How will the role of users change in this new world? Will users want a share of this profit? Will users finally have ownership of their data? What new knowledge can be created from the massive amounts of user-generated and business-generated content?

The table below summarizes some of the advances in information systems to date.

| Era | Hardware | Operating System Software | Applications |
|---|---|---|---|
| Early years (1930s) | Model K, HP's test equipment, calculator, UNIVAC I | The first computer program was written to run and be stored on a computer. | |
| Mainframe (1970s) | Terminals connected to a mainframe computer; IBM System/360; Ethernet networking | Time-sharing (TSO) on MVS | Custom-written MRP software; email became "the killer app" |
| Personal Computer (mid-1980s) | IBM PC or compatible, sometimes connected to the mainframe computer via an expansion card; Intel microprocessor | MS-DOS | Email became "the killer app"; WordPerfect, Lotus 1-2-3 |
| Client-Server (late 1980s to early 1990s) | IBM PC "clone" on a Novell network; Apple's Apple-1 | Windows for Workgroups, MacOS | Microsoft Word, Microsoft Excel |
| World Wide Web (mid-1990s to early 2000s) | IBM PC "clone" connected to the company intranet | Windows XP, MacOS | Microsoft Office, Internet Explorer, Chrome |
| Web 2.0 (mid-2000s to present) | Laptop connected to company Wi-Fi; smartphones | Windows 7, Linux, MacOS | Microsoft Office, Firefox, social media platforms, blogging, search, texting |
| Web 3.0 (today and beyond) | Apple iPad, Apple Watch, robots, Fitbit, Kindle, Nest, semi-autonomous and autonomous cars, drones, virtual reality goggles, artificial intelligence bots, language models | iOS, Android, Windows 11 | Mobile-friendly websites, more mobile apps, e-commerce, Metaverse, Siri, OpenAI's ChatGPT, etc. |

We seem to be at a tipping point where many technological advances have come of age. The miniaturization of devices such as cameras and sensors, faster and smaller processors, and software advances in fields such as artificial intelligence, combined with the availability of massive amounts of data, have begun to bring in new types of computing devices, small and big, that can do things that were unheard of in the last four decades. A robot the size of a fly is already in limited use, and driverless cars are in the 'test-drive' phase in a few cities, among other advances that meet customers' needs today and anticipate new ones for the future. "Where do we go from here?" is a question you are now part of answering as you go through the rest of the chapters. We may not know exactly what the future will look like, but we can reasonably assume that information systems will touch almost every aspect of our personal lives, our work lives, and our local and global social norms. Are you prepared to be an even more sophisticated user? Are you preparing yourself to be competitive in your chosen field? Are there new norms to be embraced?

References

CERN. (n.d.). The Birth of the Web. Retrieved from public.web.cern.ch

Computer History Museum. (n.d.). Timeline of Computer History. Retrieved July 10, 2020, from www.computerhistory.org

Yahoo Finance. (2022). Amazon.com, Inc. (AMZN). Retrieved October 31, 2022, from finance.yahoo.com